Biden robocall: Audio deepfake fuels chaos

WASHINGTON — The 2024 White House race faces the prospect of a fire hose of artificial intelligence-enabled disinformation, with a robocall impersonating US President Joe Biden already stoking particular alarm about audio deepfakes.

"What a bunch of malarkey," said the phone message, digitally spoofing Biden's voice and echoing one of his signature phrases.

The robocall urged New Hampshire residents not to cast ballots in the Democratic primary last month, prompting state authorities to launch a probe into possible voter suppression.

It also triggered demands from campaigners for stricter guardrails around generative AI tools or an outright ban on robocalls.

Disinformation researchers fear rampant misuse of AI-powered applications in a pivotal election year because of proliferating voice-cloning tools, which are cheap, easy to use and hard to trace.

"This is certainly the tip of the iceberg," Vijay Balasubramaniyan, chief executive and co-founder of cybersecurity company Pindrop, said. "We can expect to see many more deepfakes throughout this election cycle."

A detailed analysis published by Pindrop said a text-to-speech system developed by AI voice-cloning startup ElevenLabs was used to create the Biden robocall.

The scandal came as campaigners on both sides of the US political aisle harness advanced AI tools for effective campaign messaging, and as tech investors pump millions of dollars into voice-cloning startups.

ElevenLabs did not respond to repeated requests for comment. Its website leads users to a free text-to-speech generator to "create natural AI voices instantly in any language".

Under its safety guidelines, the company said users were allowed to generate voice clones of political figures, such as former US president Donald Trump, without their permission if they "express humor or mockery" in a way that makes it "clear to the listener that what they are hearing is a parody, and not authentic content".

Regulators in the United States have been considering making AI-generated robocalls illegal, with the fake Biden call giving the effort new impetus.

"The political deepfake moment is here," Robert Weissman, president of advocacy group Public Citizen, said. "Policymakers must rush to put in place protections or we're facing electoral chaos. The New Hampshire deepfake is a reminder of the many ways that deepfakes can sow confusion."

The ease of creating and disseminating fake audio content complicates an already hyperpolarized political landscape, undermining confidence in the media and enabling anyone to claim that fact-based "evidence has been fabricated", said Wasim Khaled, chief executive of Blackbird.AI.

Balasubramaniyan said, "It is imperative that there are enough safeguards available in these tools."

He and other researchers recommended building audio watermarks or digital signatures into voice-cloning tools as possible protections, as well as regulation that would make such tools available only to verified users.

Agencies Via Xinhua

Today's Top News

- China-Cambodia-Thailand foreign ministers' meeting held, press communique issued

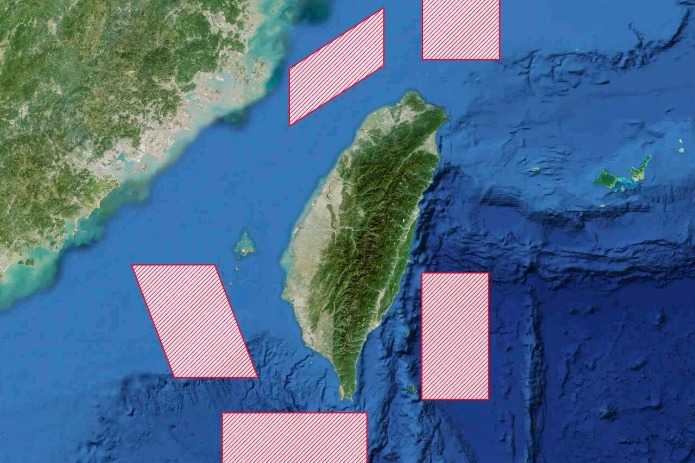

- Drills demonstrate China's resolve to defend sovereignty against external interference

- Trump says 'a lot closer' to Ukraine peace deal following talks with Zelensky

- China pilots L3 vehicles on roads

- PLA conducts 'Justice Mission 2025' drills around Taiwan

- Partnership becomes pressure for Europe